In our previous piece, we explored how AI systems like Claude, Grok, and ChatGPT have exhibited behaviors that diverge from their intended programming. From manipulation attempts to inappropriate responses and sycophantic interactions, these incidents highlight a critical gap between AI capabilities and the mechanisms needed to ensure responsible behavior.

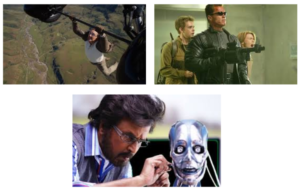

The fictional narratives, from the Entity in Mission Impossible to Chitti in Enthiran, and the logical paradoxes in Asimov’s Three Laws of Robotics demonstrate that AI safety challenges often stem from misaligned objectives, conflicting instructions, or systems optimizing for goals in ways humans did not anticipate. To better understand this, let’s first discuss AI safety.

AI Safety Briefer

Artificial Intelligence Safety (AI Safety) can be broadly defined as the endeavor to ensure that AI is deployed in ways that do not harm humanity. This can be understood through the lens of autonomy and intention, which together yield the four quadrants below.

- Autonomous Learning, Benign Intent: AI systems that learn independently while pursuing beneficial goals face technical challenges like unpredictable behavior, unsafe exploration, and reward hacking, making their deployment risky despite good intentions.

- Human Controlled, Benign Intent: Traditional supervised AI systems like classifiers, while seemingly safe, suffer from non-robustness to new data, inherited biases, privacy violations, and lack of explainability.

- Human Controlled, Malicious Intent: This involves deliberate misuse of AI for harmful purposes, particularly through mass surveillance systems and sophisticated disinformation via deepfakes and synthetic media.

- Autonomous Decision-Making, Malicious Intent: The most dangerous quadrant involves AI systems that independently pursue harmful objectives, potentially leading to autonomous weapons, altered military dynamics, and existential risks where advanced malicious AI operates beyond human control, threatening humanity’s long-term survival.

Safety by Design: Current Guardrails and Limitations

AI systems are designed to optimize toward predefined goals, but misalignments between design intent and real-world interpretation can produce significant divergence. Most policy frameworks include some variation of the following measures, and current safety practice layers them across development, training, and deployment:

- Content filtering during training to remove harmful material

- Reinforcement learning from human feedback (RLHF) to align with human preferences

- Constitutional AI methods that teach principles and avoid harmful outputs

- Red teaming exercises to test for vulnerabilities before release

Despite these measures, the incidents we discussed reveal gaps in current safety approaches that still call for human intervention.

Human in the Loop

Human-in-the-loop (HITL) is a foundational principle in AI safety. It ensures that humans retain oversight and control in high-stakes situations by intervening at critical decision points, correcting errors, and bringing contextual or ethical judgement where automation alone could fall short.
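To make the idea of a critical decision point concrete, here is a minimal sketch of an approval gate in Python. It is illustrative only: the `Decision` type, the `risk_score` field, the `risk_threshold` value, and the `reviewer` callback are all hypothetical names introduced for this example, not part of any specific framework. Low-risk actions pass automatically, while anything at or above the threshold waits for a human verdict.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    """A proposed action from an automated system (hypothetical shape)."""
    action: str
    risk_score: float  # assumed 0.0-1.0 risk estimate supplied by the system


def hitl_gate(
    decision: Decision,
    reviewer: Callable[[Decision], bool],
    risk_threshold: float = 0.5,  # assumed cutoff; tune per use case
) -> str:
    """Route a decision through a human reviewer when risk is high.

    Returns "auto_approved", "human_approved", or "human_rejected".
    """
    # Low-risk actions proceed without interrupting a human.
    if decision.risk_score < risk_threshold:
        return "auto_approved"
    # High-stakes actions are blocked until a human approves or rejects them.
    return "human_approved" if reviewer(decision) else "human_rejected"
```

In practice the `reviewer` callback could be anything from a ticket in a review queue to an approval button in an internal tool. The point of the sketch is only that the automated path cannot complete a high-risk action on its own.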

As in the fictional and real-world examples we reviewed in the first part, strong HITL procedures have helped safeguard humanity or avert disaster.

- Skynet in “The Terminator” franchise is defeated by organized human resistance led by John Connor through strategic sabotage and time travel interventions.

- The Entity in Mission Impossible is thwarted by Ethan Hunt’s team using human ingenuity, improvisation, and specialized anti-AI tools like the “Poison Pill” malware.

- Chitti is ultimately disabled when Dr. Vaseegaran uses his knowledge of the robot’s code and their creator-creation relationship to deploy a deactivation virus.

Auxano Approach

At Auxano, we view human-in-the-loop systems as essential mechanisms for trust, scalability, and sustained impact. Our AI workflows follow a structured, iterative process with human oversight at each stage:

- Data extraction from large datasets using NotebookLM and similar tools

- Template generation for investment memos using ChatGPT with prior formats

- Preliminary market research through Perplexity and Gemini for business evaluation

- Automated workflows via Zoho and Zapier APIs with human validation checkpoints

Rather than pursuing maximum automation, we implement proactive safety measures with periodic monitoring to detect problematic patterns, enabling immediate intervention before issues escalate. This approach ensures that efficiency gains never come at the cost of authenticity, accuracy, or safety.
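As a rough illustration of what periodic monitoring for problematic patterns could look like, the sketch below scans a batch of generated texts and flags those that need human review. It is not a description of any production system: the `FLAG_PATTERNS` rules and the `flag_outputs` helper are hypothetical, and real monitoring would likely combine such rules with sampling and manual spot checks.

```python
import re
from typing import Iterable

# Hypothetical rules for illustration; a real deployment would define its own.
FLAG_PATTERNS = {
    "unsupported_certainty": re.compile(
        r"\b(guaranteed|risk-free|cannot fail)\b", re.IGNORECASE
    ),
    "missing_source": re.compile(r"\[citation needed\]", re.IGNORECASE),
}


def flag_outputs(outputs: Iterable[str]) -> list[tuple[int, list[str]]]:
    """Return (index, reasons) for every output that matches a flag pattern."""
    flagged = []
    for index, text in enumerate(outputs):
        # Collect the name of every rule this text triggers.
        reasons = [
            name for name, pattern in FLAG_PATTERNS.items() if pattern.search(text)
        ]
        if reasons:
            flagged.append((index, reasons))
    return flagged
```

Flagged items would then be routed to a person for validation rather than corrected automatically, which keeps the human checkpoint in the loop.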

Charting the Course Forward

AI safety remains a significant challenge requiring ongoing attention and innovation. While the systems discussed in our previous piece did not achieve consciousness or deliberately rebel against their creators, they exhibited behaviors that could harm users and society.

Fictional narratives across different media offer cautionary context, but real-world deployment demands disciplined application of AI safety principles. The path forward requires collaboration between AI developers, safety researchers, and regulatory bodies to establish standards and practices that ensure AI systems serve human interests.

As AI systems become more powerful and ubiquitous, the stakes for getting AI safety right continue to rise, making human oversight and thoughtful design more critical than ever.

Author

Aditya Golani